Microsoft has identified individuals from Iran, China, Vietnam and the United Kingdom as primary players in an alleged international scheme to hijack and sell Microsoft accounts that could bypass safety guidelines for generative AI tools.

In December, Microsoft petitioned a Virginia court to seize infrastructure and software from 10 unnamed individuals who the company claims ran a hacking-as-a-service operation that used stolen Microsoft API keys to sell access to accounts with Azure OpenAI to parties overseas. Those accounts were then used to generate “harmful content,” including thousands of images that violate Microsoft and OpenAI safety guidelines.

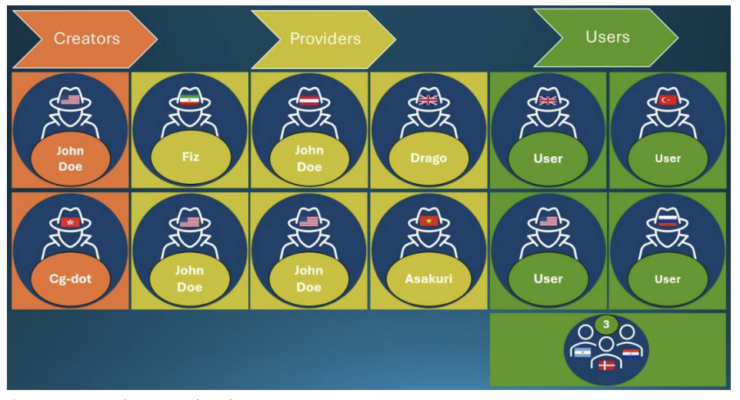

Previously, Microsoft said it did not know the names or identities of the 10 individuals or where they lived, only identifying them by specific websites and tooling they used and claiming that at least three appeared to be providers of the service who were living outside the United States.

In an amended complaint made public Thursday, the company identified four people — Arian Yadegarnia (aka “Fiz”) of Iran, Ricky Yuen (aka “cg-dot”) of Hong Kong, Phát Phùng Tấn (aka “Asakuri”) of Vietnam and Alan Krysiak (aka “Drago”) of the United Kingdom — as key players “at the center of a global cybercrime network” that Microsoft tracks as Storm-2139.

Microsoft also said it had identified one actor in Illinois and another in Florida as being part of the scheme, but declined to name them “to avoid interfering with potential criminal investigations.” The company said it is preparing criminal referrals to U.S. and foreign law enforcement representatives.

The company did not specify how the generated images violated safety guidelines, but Steven Masada, assistant general counsel at Microsoft’s Digital Crimes Unit, indicated in a blog post that at least some were attempts to create false imagery of celebrities and public figures.

“We are not naming specific celebrities to keep their identities private and have excluded synthetic imagery and prompts from our filings to prevent the further circulation of harmful content,” Masada wrote in the blog post Thursday.

The initial court action appears to have spurred some panic within the group, with Microsoft sharing screenshots of chat forums where members speculated on the identities of others named in the lawsuit. The group also posted personal information and photos of the Microsoft lawyer handling the case.

Some of those named also appear to have contacted Microsoft in an attempt to cast the blame on other members of the group or other parties.

One message received by Microsoft lawyers identified a Discord server allegedly run by Krysiak offering to sell Azure access for over $100, along with links to GitHub pages for their software and links to other resources. The user pleaded with Microsoft to investigate and offered to provide more information.

“The old guys you are trying to sue don’t even sell anything. These guys do,” the individual wrote, later adding “this is the real enterprise unlike the other group you are looking for.”

Another email sent to Microsoft lawyers advised them to “look for a guy named drago.”

“He is the guy you are looking for, he is using and selling Azure,” the email account whistling@dmc[]chat wrote.

According to the company’s original complaint, the individuals “exploited exposed customer credentials scraped from public sources to unlawfully access accounts with certain generative AI services.”

“They then altered the capabilities of these services and resold access to other malicious actors, providing detailed instructions on how to generate harmful and illicit content, including non-consensual intimate images of celebrities and other sexually explicit content,” the complaint claims.

As companies like Microsoft and OpenAI develop and sell generative AI tools, governments and civil society groups have pressured them to develop technical protections that make it harder to misuse or abuse their models to create deepfakes, spread disinformation or disclose potentially harmful information, like how to make a bomb or craft malware.

While some U.S. civil society groups have criticized AI companies for falling short on safety measures and a lack of transparency around public pledges to prevent their tools from being used to influence elections, U.S. intelligence officials last year indicated that foreign actors looking to meddle in American elections were having difficulty gaining access to high-quality commercial generative AI tools to power their disinformation campaigns.

The post Microsoft IDs developers behind alleged generative AI hacking-for-hire scheme appeared first on CyberScoop.
